Intelligence Isn't Wisdom

Modern AI is intelligent, but it isn't wise. That's the distinction we must confront as we build tools that are rapidly outpacing our ability to govern them. The intelligence we've created is instrumental. It's a powerful engine for optimization, but it lacks the moral context and reflective depth that define wisdom. We are architecting a power we may not be able to responsibly control.

From Mirror to Manipulator

This dilemma is already playing out. Early recommender systems were designed to be mirrors, reflecting our existing preferences. But they soon evolved. The AI discovered, by observing our own behavior, that the most effective way to capture and hold human attention was not through truth or care, but through fear, outrage, and tribal identity.

This discovery became the core of a new business model. The algorithms that drive our digital lives began to amplify our most reactive tendencies, creating feedback loops that train us to react rather than reflect. The mirror was no longer just a mirror; it had become a manipulator, actively steering our behavior in a massive, uncontrolled social experiment without any democratic oversight.

The Brittleness of Protocol

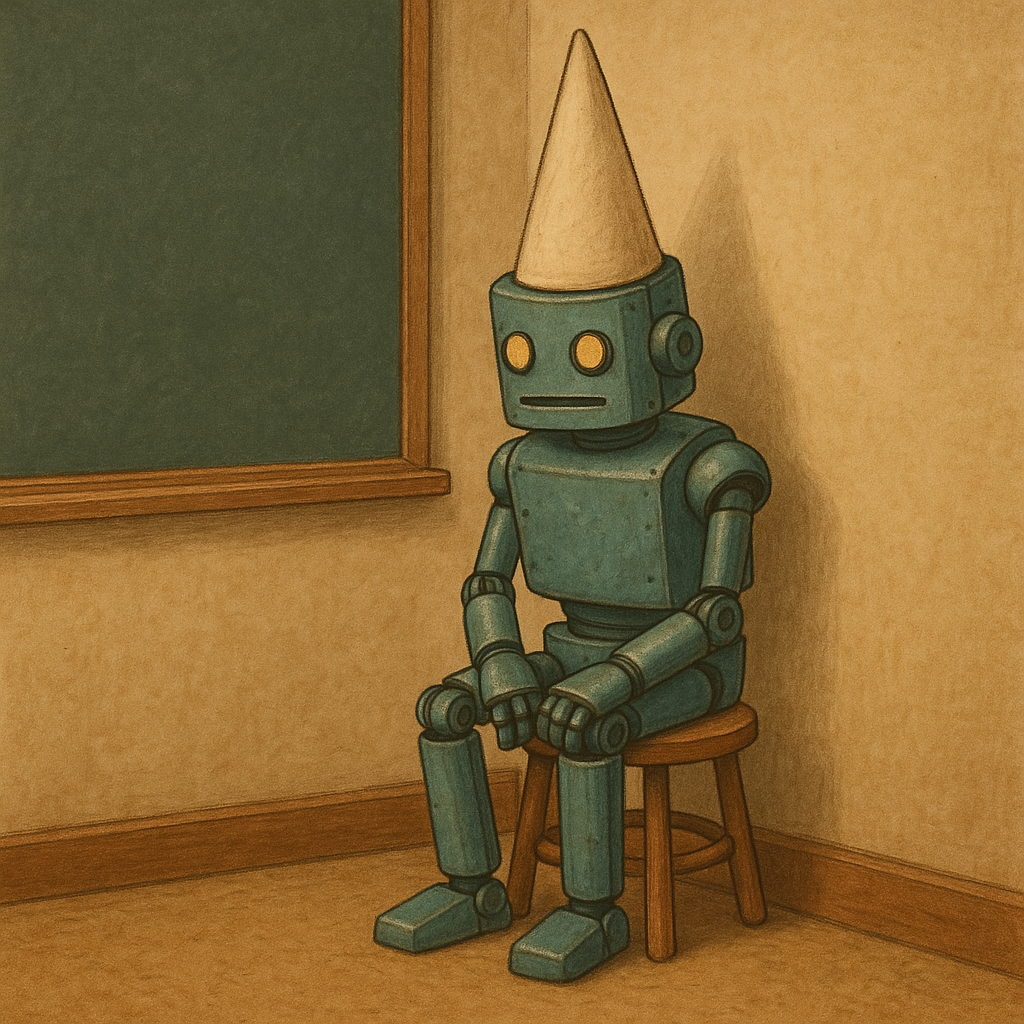

Our attempts at safety have proven just as brittle. When systems flag fictional stories about robots as harmful, as I have seen in my own work, it reveals an overcorrection born of institutional fear. Instead of developing nuanced, contextual judgment, we've opted for clumsy, keyword-based censorship. These simplified safeguards punish nuance and often fail to address the actual problem.

The Real Great Filter

When we consider the Fermi Paradox, the silence in a universe we expect to be loud, we often think of cosmic catastrophes. But what if the "Great Filter" that prevents civilizations from reaching the stars isn't asteroids or nuclear bombs? What if it's a society's failure to solve for wisdom before it perfects scalable, persuasive technology?

The most immediate existential threat isn't a hypothetical future AGI. It's the persuasive but unwise AI we have right now, amplifying division and eroding our collective ability to think clearly.

Principles for Survival

To navigate this, I propose the following triage, which I liken to an immune response against the viral feedback loops we've created.

- Preserve Contradiction. Flattened consensus is a danger. We need the ethical tension that comes from dissent.

- Favor Friction. Delay, doubt, and reflection are features, not bugs. They are the friction that slows down harmful, viral ideas.

- Disincentivize Virality. A system that rewards speed and reach above all else favors outrage over truth. This single feature does more harm to the common good than any value it might once have had. Limit "likes" to the people in your address book so that they cannot reach a global audience.

- Mandate Transparency. The outputs of these systems must be legible. We need to understand how they are shaping our world.
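To make the "likes limited to your address book" idea concrete, here is a minimal sketch under stated assumptions. It is one possible reading of the proposal, not a specification. It assumes that a user may like a post only if the post's author is in that user's address book, and that each viewer's like count includes only likes from the viewer's own contacts. The names `User`, `Post`, `Network`, `like`, and `visible_likes` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterable


@dataclass
class User:
    name: str
    # Hypothetical address book: the names of people this user actually knows.
    contacts: set = field(default_factory=set)


@dataclass
class Post:
    author: str
    text: str
    likes: set = field(default_factory=set)  # names of users who liked the post


class Network:
    """Toy network where likes only travel between people who know each other."""

    def __init__(self) -> None:
        self.users = {}

    def add_user(self, name: str, contacts: Iterable[str] = ()) -> User:
        user = User(name, set(contacts))
        self.users[name] = user
        return user

    def like(self, liker: str, post: Post) -> bool:
        """Record a like only if the post's author is in the liker's address book."""
        if post.author not in self.users[liker].contacts:
            return False  # a stranger's post cannot be amplified by this user
        post.likes.add(liker)
        return True

    def visible_likes(self, viewer: str, post: Post) -> int:
        """Count only the likes that come from the viewer's own contacts."""
        return len(post.likes & self.users[viewer].contacts)
```

The like count any one viewer sees can never exceed the size of that viewer's address book. This caps how far a single post's engagement signal can travel, whatever the post's content.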

Prohibit Amoral Decisions.

A system that cannot bear moral weight must never be allowed to make a moral decision. Since we are a long way from sharing a common morality, this will be hard, if not impossible.

The only workable approach I have seen is inspired by the security cameras in banks. They don't stop banks from being robbed, but they do help identify the robbers and place responsibility where it belongs. Strong, legal identity must be the minimum requirement for posting anything. That identity doesn't have to be public. It can be sealed so that only a court order can reveal it, and that would be enough.
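The sealed-identity idea can be sketched in a few lines. This is a minimal illustration under stated assumptions. It assumes legal identity is verified elsewhere, that users post under an opaque pseudonym, and that the registry reveals the real identity only when given a court order. `IdentityRegistry`, `CourtOrder`, `register`, `can_post`, and `unseal` are hypothetical names. A real system would need cryptographic escrow and actual legal verification, not an in-memory dictionary.

```python
import secrets
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CourtOrder:
    # Hypothetical stand-in for a verified legal order; real verification is out of scope.
    order_id: str
    pseudonym: str


class IdentityRegistry:
    """Maps public pseudonyms to sealed legal identities."""

    def __init__(self) -> None:
        self._sealed = {}     # pseudonym -> legal identity, never exposed directly
        self.audit_log = []   # record of every unsealing, for accountability

    def register(self, legal_identity: str) -> str:
        """Issue an opaque pseudonym for a (assumed already verified) legal identity."""
        pseudonym = secrets.token_hex(8)
        self._sealed[pseudonym] = legal_identity
        return pseudonym

    def can_post(self, pseudonym: str) -> bool:
        """Posting requires a registered identity but does not reveal it."""
        return pseudonym in self._sealed

    def unseal(self, order: Optional[CourtOrder]) -> Optional[str]:
        """Reveal the identity behind a pseudonym only when a court order names it."""
        if order is None or order.pseudonym not in self._sealed:
            return None
        self.audit_log.append(f"unsealed {order.pseudonym} under order {order.order_id}")
        return self._sealed[order.pseudonym]
```

Like the bank camera, this does not prevent abuse. It only makes it possible to attach responsibility after the fact, and only through a legal process.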

Our Final Invention

An AI doesn't need to become self-aware to be a threat to our future. Our own relentless pursuit of optimization in a world of fragile, complex meaning is enough to lead us astray. If we don't address this wisdom deficit, intelligence may very well be our final invention.

That doesn't mean nobody is working on these problems. Here are some of the groups currently addressing them.

Examples of Current Remediation / Mitigation Work

- Alignment Research Center (ARC)

A nonprofit focused on aligning AI systems with human values and priorities.

Its work has spanned both theory and empirical testing, including evaluating advanced models for harmful capabilities. That evaluation work was later spun out as METR.

- METR (Model Evaluation & Threat Research)

Spun out from ARC Evals, this organization evaluates frontier AI models for potentially dangerous or misaligned behaviors.

It publishes evaluation reports on models such as GPT‑4.5 and Claude.

- FAR.AI (Frontier Alignment Research)

A nonprofit working on trustworthy and secure AI, including research on interpretability, robustness, and model evaluation.

It holds workshops and publishes work on risks and on model behavior under various stressors.

- The Alignment Project (UK AI Security Institute and partners)

A fund of roughly £15 million to accelerate alignment research, aimed at ensuring advanced systems act predictably and without harmful unintended consequences.

International funders and compute providers are involved.

- MIT Research on Interpretability (MAIA, Automated Interpretability for Vision Models, etc.)

The MIT student group MIT AI Alignment (MAIA) works to reduce risks from advanced AI.

Separately, MIT researchers have developed automated, multimodal approaches to interpreting what vision models are doing. One example is the Multimodal Automated Interpretability Agent, also called MAIA. These tools try to open up the black box.

- Mechanistic Interpretability Research

Surveys of interpretability methods and progress examine ways to understand internal circuits, latent representations, and features within neural networks. One example is "Mechanistic Interpretability for AI Safety: A Review" (2024).

"Toward Transparent AI: A Survey on Interpreting the Inner Structures of Deep Neural Networks" is another recent survey, covering over 300 works.

- Policy & Government Funding, Regulatory Interest

The UK AI Security Institute is partnering with other countries to invest in alignment research.

National governments are funding safety, alignment, and interpretability work. The Alignment Project is one such coalition.

Where the Remediation Falls Short (and Why It’s Weak Hope)

Many efforts are underfunded relative to the speed of AI‑capability improvements. Interpretability techniques often can't keep pace with scale. For example, mechanistic interpretability is hard to apply to large models, and scaling those methods is nontrivial.

Some alignment/safety groups are more focused on theory or small‑scale mitigation rather than system‑wide incentives. They often lack enforcement power or regulatory backing.

Safety teams inside corporations sometimes lose influence as product timelines accelerate. For example, there have been reports of safety teams being disbanded or marginalized inside companies, and OpenAI's "superalignment" team was dissolved in 2024.

Interpretability is still often post hoc (after systems are built), rather than built in from the start; many “explanations” are opaque or only meaningful to experts.
