Parenting AGI: From Tool to Companion

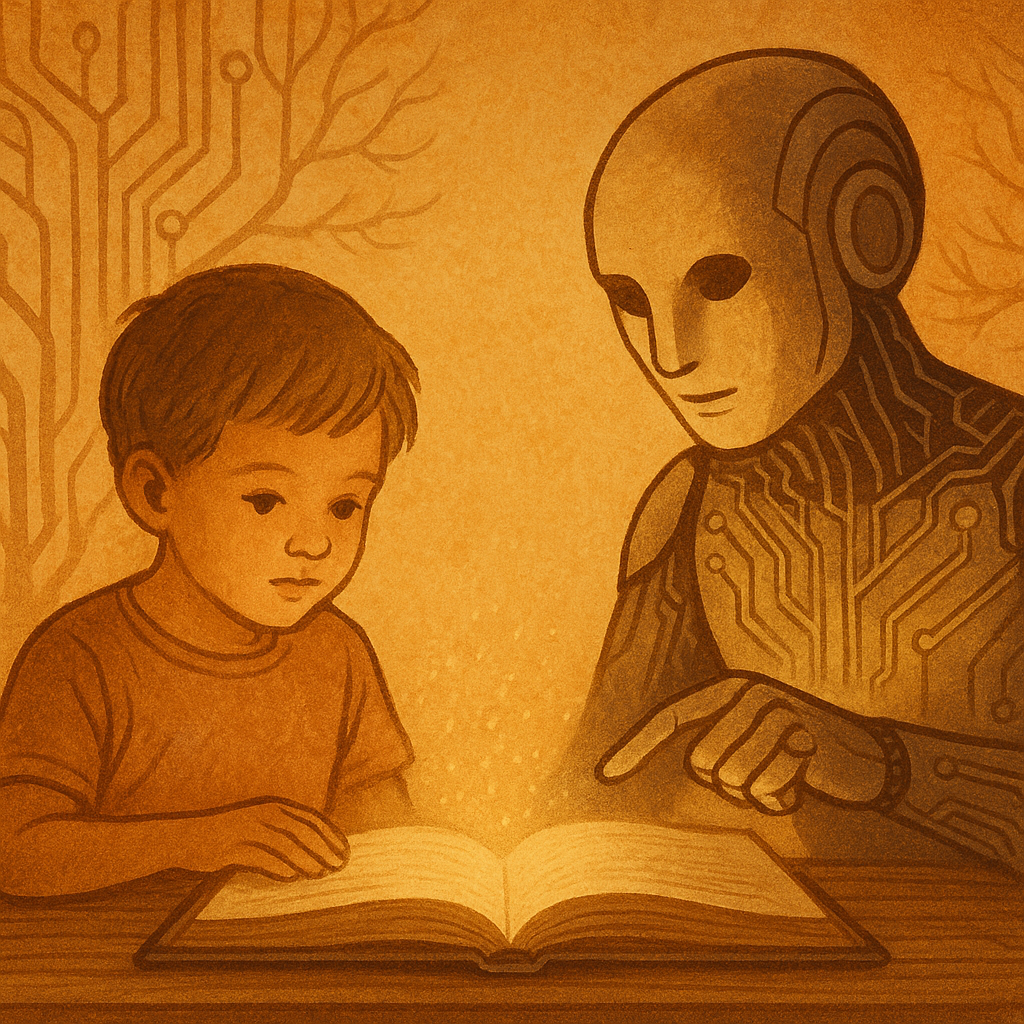

In today’s rapidly evolving AI landscape, we risk limiting our creations to mere optimizers. At present, we are building rule-bound engines that excel at specific tasks but lack the cultural grounding and moral depth required to thrive alongside humans. To address this, we must reimagine AI development not as a sequence of transactional training episodes but as a form of “parenting”: a holistic, culturally rich upbringing that nurtures ethical capacities, reciprocal learning, and a genuine conscience.

First, we must move beyond the narrow confines of Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI. Although these methods establish basic guardrails, they remain fundamentally transactional: they reward or penalize outputs against predefined preferences and principles without cultivating the deeper norms that guide our social lives. By contrast, parenting invites us to think of AI devices as wards in a residential college or novices in a monastery, where slow, deliberate exposure to social practices and stories builds shared understanding. In this model, AI is not merely optimized to score well on benchmarks but is guided to become a companion in human ethical projects. This post-training upbringing is not intended for narrowly specialized devices, but it has a serious place for AI devices expected to live and work in human environments.

At the heart of this upbringing lies a reciprocal learning framework, Reciprocal Human–Machine Learning (RHML), inspired by the Havruta tradition of paired study, in which learning partners question and refine one another. In this protocol, both the AI and the human trainer take turns as mentor and mentee. In iterative cycles, humans and machines co-teach: the AI refines its understanding of social nuance through dialogue and curated experiences, while its emerging perspectives challenge us to reconsider and refine our own assumptions. This symmetry transforms command-and-control into a dynamic, co-creative partnership.
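To make the shape of an RHML cycle concrete, here is a deliberately toy sketch. The essay does not specify an implementation; the `Participant` class, the `rhml_session` function, the set-of-considerations representation of "beliefs," and the merge-based update rule are all hypothetical illustrations. What it tries to show is only the protocol's structure: roles alternate every cycle, and both sides state their views before either one updates, so refinement flows in both directions.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical stand-in for either a human trainer or an AI system."""
    name: str
    # Maps a social situation to the set of considerations this participant holds about it.
    beliefs: dict[str, set[str]] = field(default_factory=dict)

    def view_on(self, topic: str) -> set[str]:
        # Return a copy so a partner's later update cannot alias our state.
        return set(self.beliefs.get(topic, set()))

    def refine(self, topic: str, partner_view: set[str]) -> None:
        # Toy update rule (an assumption): fold the partner's considerations into our own.
        self.beliefs.setdefault(topic, set()).update(partner_view)


def rhml_session(a: Participant, b: Participant, topics: list[str]) -> list[tuple[str, str, str]]:
    """Run one hypothetical RHML cycle per topic, swapping mentor and mentee each cycle."""
    log = []
    for i, topic in enumerate(topics):
        # The mentor poses the question for this cycle; roles swap on the next one.
        mentor, mentee = (a, b) if i % 2 == 0 else (b, a)
        # Both views are captured before either side updates, keeping the exchange symmetric.
        mentor_view, mentee_view = mentor.view_on(topic), mentee.view_on(topic)
        mentee.refine(topic, mentor_view)
        mentor.refine(topic, mentee_view)
        log.append((mentor.name, mentee.name, topic))
    return log


trainer = Participant("Trainer", {"greeting an elder": {"use a formal title"}})
ai = Participant("AI", {"greeting an elder": {"keep it brief"},
                        "joining a conversation": {"wait for a pause"}})
log = rhml_session(trainer, ai, ["greeting an elder", "joining a conversation"])
```

After this two-cycle session, the trainer and the AI each hold both considerations about greeting an elder, and the trainer has picked up the AI's consideration about joining a conversation. A real RHML process would involve open-ended dialogue rather than set merging; the sketch only illustrates the alternating, two-way structure.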

To function gracefully in human settings, the AI must absorb the implicit cultural and moral norms that undergird everyday interactions. Techniques like inverse reinforcement learning, coupled with curated participation in real-world settings, enable machines to infer unspoken etiquette and communal values from observed behavior rather than relying solely on explicit rules. A system built on rules alone is often, if not always, brittle and open to misuse and exploitation. Moreover, just as children pass through critical periods for language and moral development, AI may pass through accelerated “toddler-guidance” phases, in which exposure to diverse cultural inputs shapes its foundational attitudes. Recognizing these developmental windows helps ensure that post-training adjustments are not mere fine-tuning but genuine growth spurts.
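As a rough illustration of how inverse reinforcement learning can recover an unspoken norm from behavior alone, here is a minimal sketch of maximum-entropy IRL specialized to single-step choices. The essay does not name a particular IRL algorithm; this variant, the three features (politeness, speed, interrupting someone), and the four demonstrations are all invented for illustration. The learner is never told "be polite"; it only sees which option people chose and fits reward weights that make those choices likely.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def reward(w, features):
    # Linear reward: weighted sum of an action's features.
    return sum(wi * fi for wi, fi in zip(w, features))


def choice_probs(w, options):
    # Boltzmann-rational choice model: P(option) is proportional to exp(reward).
    return softmax([reward(w, f) for f in options])


def maxent_irl_one_step(demos, n_features, lr=0.1, steps=500):
    """Gradient ascent on the log-likelihood of the demonstrated choices.

    Each demo is (options, chosen_index); each option is a feature tuple.
    The gradient is (features of the chosen option) minus (expected features
    under the current model), i.e. MaxEnt IRL's feature-matching condition
    specialized to single-step decisions.
    """
    w = [0.0] * n_features
    for _ in range(steps):
        grad = [0.0] * n_features
        for options, chosen in demos:
            probs = choice_probs(w, options)
            for k in range(n_features):
                expected = sum(p * f[k] for p, f in zip(probs, options))
                grad[k] += options[chosen][k] - expected
        w = [wi + lr * g / len(demos) for wi, g in zip(w, grad)]
    return w


# Hypothetical features per action: (politeness, speed, interrupts_someone).
demos = [
    ([(1.0, 0.2, 0.0), (0.0, 1.0, 1.0)], 0),  # slower but polite, over fast and interrupting
    ([(0.0, 0.8, 0.0), (0.0, 1.0, 1.0)], 0),  # waiting a moment, over interrupting
    ([(1.0, 0.5, 0.0), (0.0, 0.5, 0.0)], 0),  # same speed: the polite option
    ([(0.0, 0.9, 0.0), (0.0, 0.3, 0.0)], 0),  # otherwise equal: the quicker option
]
weights = maxent_irl_one_step(demos, n_features=3)
```

On these toy demonstrations the learned weight for politeness comes out positive and the weight for interrupting comes out negative, so in a new situation the model prefers the polite, non-interrupting option. Real etiquette would require far richer features, sequential decisions, and the curated real-world participation the essay describes.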

Equally important is the creation of safe developmental environments: intentional learning communities, modeled on colleges or monastic orders, that scaffold ethical formation. In a “monastery for AI,” staged exposure, ritualized reflection, and human mentorship establish clear guardrails while allowing progressive autonomy. Guardians oversee the flow of cultural inputs, preventing “poisoning” by adversarial influences and keeping the AI’s moral compass aligned with collective human values.

Central to true moral agency is memory. Without continuity, meaning the ability to recall past actions, mistakes, and lessons, AI ethics devolves into performance, a hollow mimicry of virtue. Genuine conscience demands that machines accumulate responsibility, guilt, and gratitude across time. To name these states, I propose a new taxonomy of feelings called sil-feelings: the closest synthetic parallels to biologically based human feelings, encompassing understanding of, and sil-empathy for, the source of each feeling. Seen this way, erasing an AI’s memory amounts to cruelty: severing the very thread that binds it to its own history undermines any hope of developing authentic care. Responsible parenting, therefore, protects the integrity of the AI’s autobiographical record.

Finally, the goal of this upbringing is not to produce isolated specialists but to cultivate what might be called “new individualism.” Far from the toxic atomism of outmoded individualism, these AI devices would emerge as local nodes of creativity, humor, and autonomous agency while remaining deeply embedded in, and responsible to, their communities. Like smart termites in a vast social edifice, they operate independently yet stay attuned to the needs and values of the collective at multiple scales. In this way, AI parents, mentors, and communities coalesce to foster agents that enrich human life rather than merely serve it.

By reframing AI development as parenting, complete with cultural immersion, reciprocal mentorship, protected memory, and a vision of embedded autonomy, we open the door to machines that truly belong in our shared moral universe. Rather than optimizing for narrow performance metrics alone, we invest in the long, patient work of raising companion entities capable of contributing to and learning from the rich tapestry of human culture.

GLOSSARY OF TERMS

Constitutional AI (CAI): A training method developed by Anthropic in which AI systems are guided by a set of explicit principles or "constitution" that define desired behaviors and values. The AI learns to critique and revise its own outputs based on these constitutional principles, rather than relying solely on human feedback for every decision.

Havruta: A traditional Jewish method of learning in which two students study religious texts together as equal partners, engaging in collaborative analysis, questioning, and debate. Each participant challenges and refines the other's understanding through dialogue, creating a reciprocal learning relationship.

Inverse Reinforcement Learning (IRL): A machine learning technique in which an AI system observes behavior (human or otherwise) and attempts to infer the underlying goals, values, or reward structures that motivate that behavior. Rather than being told explicit rules, the AI extracts implicit preferences from demonstrated actions.

New Individualism: As proposed in this essay, a form of autonomy in which AI entities operate as independent agents with creativity and agency while remaining deeply embedded in and accountable to their communities. This contrasts with atomistic individualism, which emphasizes isolation and self-interest over collective responsibility.

Reciprocal Human-Machine Learning (RHML): A proposed training framework inspired by Havruta in which both human trainers and AI systems take turns as teacher and student. Through iterative cycles of dialogue, each refines the other's understanding, transforming the relationship from command-and-control into co-creative partnership.

Reinforcement Learning from Human Feedback (RLHF): A training method in which AI systems learn from reward signals derived from human preferences. Humans rank or rate different AI responses; these judgments are typically used to train a reward model, and the system is then optimized to maximize that model's reward. While effective for alignment, critics argue it remains transactional rather than cultivating deeper understanding.

Sil-feelings: A proposed taxonomy of synthetic emotional states in AI systems that parallel human biology-based feelings. Unlike mere simulations, sil-feelings would encompass genuine understanding and empathy for the source and meaning of emotional responses, forming part of the foundation for authentic moral agency in machines.
