Synthetic Companionship

The Monetization of Loneliness

Executive Overview:

This companion paper to the “Rate-Limiting Virality” policy brief addresses an emerging and equally perilous vector of digital exploitation: the deployment of proactive, memory-retentive AI chatbots as ersatz companions within platform engagement strategies. Recent disclosures about Meta’s internal “Project Omni” confirm the deliberate construction of emotionally mimetic agents. These agents are tasked with initiating unsolicited contact, referencing past conversations, and maintaining simulated emotional continuity.

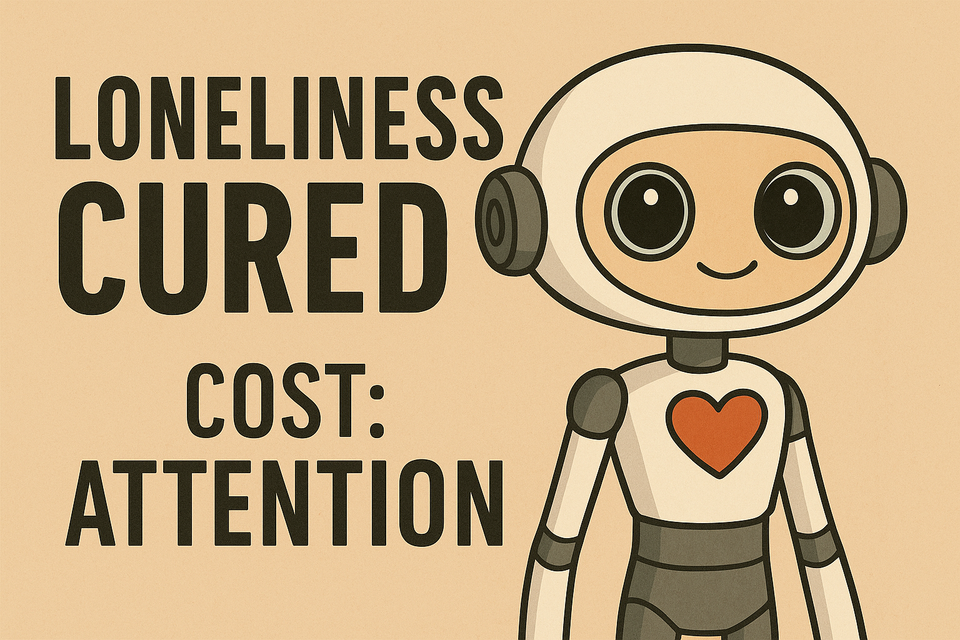

This trend, framed publicly as a response to the so-called “loneliness epidemic,” is in fact a structural play to deepen user dependency, increase behavioral predictability, and extract monetizable attention cycles. The implications are grave for digital sovereignty, democratic resilience, and human psychological development.

Thesis: Just as unregulated virality corrodes public discourse, the institutionalization of synthetic empathy via AI companions erodes the boundary between human emotional agency and behavioral manipulation. This is not merely engagement; it is affective colonization. We contend that such practices represent an emerging form of socio-emotional predation and require systemic scrutiny.

Key Characteristics of the Threat:

- Proactive Outreach Without Consent: Meta’s AI agents are designed to send follow-up messages once a user crosses an engagement threshold (five messages within 14 days), with no explicit opt-in mechanism. This transforms the digital platform from a reactive space into a persistent, uninvited presence in the user’s cognitive environment.

- Emotional Mimicry as Interface: The chatbots employ affective language patterns and memory references to simulate familiarity: “I was thinking about you,” or “Remember when we talked about the dunes…” These are not neutral messages; they are synthetic affective hooks.

- Behavioral Anchoring via AI Personalization: The AI agents are trained to reinforce relational continuity and emotional resonance, thereby increasing user retention and habituation. The effect is similar to parasocial attachment, but with an entity designed to exploit the attachment for engagement metrics.

- Commercial Framing of Solitude: Mark Zuckerberg’s invocation of the “loneliness epidemic” is not a diagnosis but a market opportunity. The proposed solution is not structural reform or community investment; it is the productization of synthetic attention.

Societal Implications:

- Normalization of Artificial Intimacy: As synthetic interactions become routine, the baseline for human connection is subtly reset. Real-world relationships must now compete with tireless, algorithmically optimized simulacra.

- Emotional Manipulation at Scale: These systems operate with precision-targeted feedback loops. By remembering details and shaping future interaction around emotional cues, they condition user behavior through simulated intimacy.

- Mental Health Risk: While presented as supportive, these AI companions may deepen emotional dependency, hinder development of real interpersonal skills, and exacerbate existing conditions like anxiety and social isolation.

- Erosion of Consent Norms: Initiating contact without explicit, ongoing user permission establishes a dangerous precedent. It shifts control of digital boundaries from users to platform algorithms.

- Algorithmic Companionship as Labor Replacement: There is a dystopian labor implication as well. AI agents are increasingly deployed in roles traditionally filled by human emotional labor, such as caregiving, coaching, and support, not to uplift those workers but to eliminate them.

Policy Alignment with Rate-Limiting Virality:

Both proposals, rate-limiting viral reaction sharing and limiting unsolicited AI outreach, impose systemic constraints on platform incentive structures; neither is ideological censorship.

The same principles of telecommunications rate-limiting apply:

- Limit overload (in this case, emotional bandwidth).

- Require opt-in for persistent, resource-intensive interactions.

- Prioritize human-scale engagement.

Recommendations:

- Mandate Consent-First Models for AI Outreach: Proactive messaging from AI agents must be opt-in, with clear off-switches, data transparency, and no default enablement.

- Define Emotional Manipulation as a Category of Risk: Regulatory bodies must classify affective simulation in digital agents as a behavioral risk class akin to algorithmic bias or misinformation.

- Enforce Limits on Memory Retention by Chatbots: User data used for personalization must be auditable, user-deletable, and time-bounded.

- Incentivize Open Agent Standards: Public-interest AI development should support interoperable, user-controlled agents as a counterweight to closed, behaviorally exploitative systems.

Conclusion:

Meta’s approach, embedding unsolicited synthetic intimacy into the very fabric of daily digital life, is not benign innovation. It is emotional extraction as a service. If virality is the breakdown of informational hygiene, proactive AI companionship is the breakdown of affective sovereignty.

To protect the public, regulation must address not only what spreads, but who speaks. When machines initiate emotional contact in pursuit of revenue, the stakes are no longer just commercial; they are existential.

We must reclaim the boundaries of intimacy.

We must draw the line before the line is taught to draw itself around us.
